A primary contributor to a sense of active presence in virtual reality is low-latency, high-accuracy six-degree-of-freedom (6DoF) head tracking. Similarly, for augmented reality, tracking is a major piece in creating augmentations that appear believably anchored in an environment. Since the experience is ultimately the product that mixed reality devices deliver, 6DoF tracking is one of the most critical pieces, along with the usual suspects: displays, optics, and input devices.

The current generation of consumer VR technology is based on the concept of outside-in tracking, where cameras or emitters are placed around a tracked volume to capture a user's movement. Issues with room setup and the out-of-box experience aside, one unfortunate consequence is that systems requiring installation are not suitable for the general case of untethered mobile AR/VR platforms.

Today, many developers are acquainted with inside-out tracking via Google's Tango, Apple's ARKit, and Microsoft's Windows Mixed Reality. Owing in part to a long lineage in academic research, particularly robotics, vision-based inside-out tracking has a wide variety of documented failure modes. One motivation for writing this post was to look underneath these systems and describe some of what makes inside-out 6DoF a complex problem space. The content is intended for a semi-technical audience with little prior exposure to computer vision.

Defining the Problem

Thanks to the massive smartphone market, low-cost, high-quality image sensors are ubiquitous. It follows that camera hardware underpins the vast majority of inside-out R&D, commonly described as visual odometry (VO). Because they must orient content between landscape and portrait, nearly all mobile devices also include inertial measurement units (IMUs, a MEMS-based combination of a 3-axis accelerometer and a 3-axis gyroscope). For reasons described shortly, device localization pipelines also make use of IMUs in a process termed visual-inertial odometry (VIO). These sensors are highly complementary and form the basis for most systems we see in production today.

The overarching problem is known as pose estimation. The word "estimation" appears throughout the computer vision literature to acknowledge uncertainty in the solution, arising from sources such as sensor noise (measurement error) and floating-point imprecision. As a consequence, frame-to-frame odometry in isolation is not sufficient to bring solid experiences to AR/VR, because the small errors in each relative estimate accumulate as the estimates are chained together. Rather than a single approach, simultaneous localization and mapping (SLAM) describes an active area of research covering a diverse family of algorithms and techniques used to generate and refine pose estimates while building a structured representation of the world in parallel. Localization and mapping are distinct steps in the pipeline, and localization is covered first.
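To make the accumulation argument concrete, here is a minimal sketch in Python that chains 2D relative pose estimates the way pure odometry does. It is not taken from any real SLAM system. The 1 cm step size and the 0.1 degree per-frame heading error are hypothetical values chosen for illustration.

```python
import math

def compose(pose, delta):
    """Apply a relative motion (dx, dy, dtheta), expressed in the current
    body frame, to a 2D pose (x, y, theta) expressed in the world frame."""
    x, y, th = pose
    dx, dy, dth = delta
    return (x + dx * math.cos(th) - dy * math.sin(th),
            y + dx * math.sin(th) + dy * math.cos(th),
            th + dth)

def integrate(deltas, start=(0.0, 0.0, 0.0)):
    """Chain frame-to-frame estimates with no map or other correction."""
    pose = start
    for delta in deltas:
        pose = compose(pose, delta)
    return pose

N_FRAMES = 300                      # 10 s at 30 fps
STEP = 0.01                         # hypothetical 1 cm forward motion per frame
HEADING_ERR = math.radians(0.1)     # hypothetical per-frame rotation error

truth = integrate([(STEP, 0.0, 0.0)] * N_FRAMES)
estimate = integrate([(STEP, 0.0, HEADING_ERR)] * N_FRAMES)
drift = math.hypot(estimate[0] - truth[0], estimate[1] - truth[1])
print(f"true end: {truth[0]:.2f} m, drift after {N_FRAMES} frames: {drift:.2f} m")
```

With these numbers the estimated end point lands roughly 0.78 m from the true one after only 3 m of travel. Each individual frame is off by just a tenth of a degree.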

Approaching Sensor Fusion

Because sensor measurements are generally noisy (more on this later in the post), device localization is naturally viewed as a probabilistic problem. One common component of a SLAM pipeline is a Kalman filter, a type of Bayesian inference system that models sensor noise to produce a more accurate estimate of the system state over time. Such filters are often introduced in the context of sensor fusion, whereby measurements from physically different sensors (such as structured data derived from images and IMU readings) are integrated into the same filter.
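As a minimal illustration, a one-dimensional Kalman filter tracks a single value and its variance. This is a sketch, not how any particular SLAM system is implemented. The random-walk motion model, the class name `ScalarKalman`, and the two sensor readings below are hypothetical. The point is that two sensors with different noise levels can update the same filter.

```python
from dataclasses import dataclass

@dataclass
class ScalarKalman:
    x: float   # current state estimate
    P: float   # variance of the estimate (uncertainty)
    Q: float   # process noise variance added per prediction step

    def predict(self) -> None:
        # Random-walk model: the best guess stays put, but uncertainty grows.
        self.P += self.Q

    def update(self, z: float, R: float) -> float:
        # R is the noise variance of whichever sensor produced measurement z.
        K = self.P / (self.P + R)      # Kalman gain, between 0 and 1
        self.x += K * (z - self.x)     # move toward the measurement
        self.P *= 1.0 - K              # the fused estimate is more certain
        return K

# Start with almost no prior knowledge, then fuse two hypothetical sensors.
kf = ScalarKalman(x=0.0, P=1e6, Q=1e-4)
kf.update(1.0, R=0.04)   # noisier sensor (std 0.2)
kf.update(1.2, R=0.01)   # more precise sensor (std 0.1)
print(f"fused estimate {kf.x:.3f}, variance {kf.P:.4f}")
```

The fused estimate (about 1.16) sits closer to the more precise reading. Its variance (about 0.008) is lower than either sensor's on its own. In a running filter, `predict()` would be called between measurements.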

In practice, ordinary Kalman filters are not used on their own, since they assume that both the motion model and the measurement model are linear. A key observation about head rotations, or any movement involving biological actuation, is that the motion is not linear. Instead, a common approach is to use an Extended Kalman Filter (EKF). At each time step, an EKF linearizes the nonlinear equations around the current estimate using a first-order Taylor expansion (the Jacobians of the models). This yields a linear system that the filter can process like an ordinary Kalman filter. In short, EKFs sidestep the inherent nonlinearity of head motion by assuming that each individual update is approximately linear.
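The linearization step can be sketched with a scalar EKF. The model below is a hypothetical toy, not a head tracker. A single tilt angle `theta` evolves linearly under a known rate but is observed through a nonlinear measurement, z = g·sin(theta). At each step the EKF evaluates the measurement Jacobian, dh/dtheta = g·cos(theta), at the current estimate. It then applies the ordinary Kalman update.

```python
import math

G = 9.81  # gravity magnitude in m/s^2, used by the toy measurement model

def h(theta: float) -> float:
    """Nonlinear measurement model: the gravity component along one axis."""
    return G * math.sin(theta)

def ekf_step(theta, P, omega, dt, z, Q, R):
    # Predict: the motion model is linear here, so it is propagated directly.
    theta = theta + omega * dt
    P = P + Q
    # Linearize h() around the predicted estimate (first-order Taylor term).
    H = G * math.cos(theta)
    # Standard Kalman update using the local linearization.
    S = H * P * H + R              # innovation variance
    K = P * H / S                  # Kalman gain
    theta = theta + K * (z - h(theta))
    P = (1.0 - K * H) * P
    return theta, P

# Hypothetical scenario: true angle 0.5 rad, held still, noise-free readings.
true_theta = 0.5
theta, P = 0.0, 1.0
for _ in range(50):
    theta, P = ekf_step(theta, P, omega=0.0, dt=0.01, z=h(true_theta), Q=1e-4, R=0.01)
print(f"estimated angle: {theta:.4f} rad")
```

The Jacobian is re-evaluated at every step. Each update therefore needs the measurement model to be only approximately linear near the current estimate.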

An EKF is one example among many: modern SLAM systems employ many tricks in pursuit of robust 6DoF. For head-mounted experiences, one important area is motion-to-photon latency. In this regard, "magic window" AR experiences powered by ARKit or Tango can relax this requirement substantially. High latency in a handheld situation does not cause the inner-ear discomfort it does in virtual reality. As a result, augmentations can be accurately overlaid on the same image frame used to compute the device pose, even if that computation took more than a hundred milliseconds. The resulting effect is that augmentations appear stably glued to the environment at the expense of mild lag.

Understanding Latency and Drift

In the context of real-time display systems, AR/VR HMDs do not have the same luxuries as handheld AR. When poses are required at 90 frames per second or more, accurate prediction becomes a core requirement. Luckily, EKFs and related filtering strategies are built around separate observation, correction, and prediction steps. Unfortunately, visual odometry updates arrive slowly. Camera frames are nominally provided at 30 to 60 frames per second, well below the rates required for high-refresh displays. Moreover, once exposure, scanout, and buffering times are added, camera updates are approximately one frame behind. So, how do we reconcile this with systems that demand low latency?
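One common way to bridge the gap is to extrapolate the latest orientation forward, using the most recent gyroscope reading, to the moment the frame will actually be displayed. The sketch below assumes a constant body-frame angular velocity over the prediction horizon. The 20 ms horizon and the function names are hypothetical. Real systems typically predict with the full filter state rather than a single gyro sample.

```python
import math

def quat_mul(a, b):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)

def predict_orientation(q, omega, horizon_s):
    """Rotate q by a body-frame angular velocity omega (rad/s), assumed
    constant for horizon_s seconds."""
    rate = math.sqrt(sum(w * w for w in omega))
    angle = rate * horizon_s
    if angle < 1e-12:
        return q                    # no measurable rotation to apply
    half = 0.5 * angle
    s = math.sin(half) / rate       # turns omega into (unit axis * sin(half))
    dq = (math.cos(half), omega[0] * s, omega[1] * s, omega[2] * s)
    return quat_mul(q, dq)          # right-multiply: rotation in the body frame

# Hypothetical numbers: a head turning at 2 rad/s about the vertical (y) axis,
# with 20 ms between the last tracked pose and photons leaving the display.
q_now = (1.0, 0.0, 0.0, 0.0)
q_display = predict_orientation(q_now, omega=(0.0, 2.0, 0.0), horizon_s=0.020)
```

At 2 rad/s, 20 ms of unpredicted latency corresponds to 0.04 rad, a little over 2 degrees of orientation error at the moment of display.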

IMUs are at the heart of 3DoF VR experiences. Simply put, accelerometers measure linear acceleration and gyroscopes measure angular velocity (i.e. rate of rotation). High-school physics teaches us that acceleration can be integrated to produce velocity, and velocity can be integrated to produce position. Ah-ha! So 6DoF tracking is available to every smartphone in the world? Somewhere along the way, nearly every computer-vision engineer devises a plan to build a million-dollar startup predicated on this idea.

Not so fast. Despite novel MEMS designs and manufacturing improvements, IMUs remain exceptionally noisy sensors and are susceptible to various types of bias (thermal being the most egregious). Fortunately, rotational 3DoF tracking is a tractable problem, because accelerometers can constrain gyro drift by detecting a globally consistent vector: gravity! (Gravity constrains pitch and roll, though not heading.) Integrating a second time to produce 3DoF translation, a process called dead reckoning, is where the situation gets messy. To be specific, it gets quadratically messy: even a constant accelerometer bias produces a position error that grows with the square of elapsed time. This buildup of pose estimation error is perceptually evident as drift. In spite of their noisy nature, IMUs produce updates in excess of 1000 Hz with low readout latency relative to cameras.
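The quadratic growth is easy to reproduce. This sketch double-integrates an accelerometer that reports a constant bias while the device is actually at rest. The 0.05 m/s² bias and the 1000 Hz rate are hypothetical values. Real sensors add noise and temperature-dependent bias on top.

```python
def dead_reckon(bias, rate_hz, seconds):
    """Integrate a biased accelerometer twice (simple Euler steps) for a device
    that is truly stationary. Returns the resulting position error in metres."""
    dt = 1.0 / rate_hz
    velocity = 0.0
    position = 0.0
    for _ in range(int(round(seconds * rate_hz))):
        accel = 0.0 + bias           # true acceleration is zero; the sensor adds bias
        velocity += accel * dt       # first integration: velocity
        position += velocity * dt    # second integration: position
    return position

BIAS = 0.05  # hypothetical constant accelerometer bias, m/s^2
for t in (1, 2, 5, 10):
    print(f"after {t:2d} s: {dead_reckon(BIAS, 1000, t):.3f} m of drift")
```

Doubling the time quadruples the error, matching ½·b·t². The result is roughly 2.5 m of phantom motion after ten seconds of standing still.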

An intrinsic property of Kalman filters is that inputs can effectively be weighted: how much can new measurements be trusted? A common configuration treats visual odometry data with high confidence and inertial data with low confidence, where confidence is a function of measured sensor noise. Overall, frequent IMU updates let the filter predict poses at high rates. Meanwhile, the less frequent but generally reliable constraints from visual odometry keep those predictions accurate.
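A toy one-dimensional simulation shows the division of labor: the filter predicts at IMU rate and corrects at camera rate. Everything here is a hypothetical simplification, not a VIO implementation. The IMU is reduced to a velocity input with a constant bias. Visual odometry supplies noise-free position fixes every 33 IMU samples (about 30 Hz). The noise values are made up.

```python
import math

IMU_HZ = 1000      # hypothetical IMU sample rate
CAM_EVERY = 33     # one visual update every 33 IMU samples (~30 Hz)
VEL_BIAS = 0.02    # hypothetical bias in the IMU-derived velocity, m/s
Q = 1e-6           # process noise variance added per IMU step
R_VO = 1e-4        # assumed variance of a visual position fix (1 cm std)

def run(seconds=10.0, use_vision=True):
    """Return the final absolute position error of a 1D predict/correct loop."""
    dt = 1.0 / IMU_HZ
    p_true = 0.0
    x, P = 0.0, 0.0                       # filter estimate and its variance
    for k in range(int(seconds * IMU_HZ)):
        v_true = 0.5 * math.cos(k * dt)   # true velocity of a swaying motion
        p_true += v_true * dt
        # Predict at IMU rate with the biased velocity: fast but drifting.
        x += (v_true + VEL_BIAS) * dt
        P += Q
        # Correct at camera rate: slow, but anchors the estimate.
        if use_vision and k % CAM_EVERY == 0:
            K = P / (P + R_VO)
            x += K * (p_true - x)
            P *= 1.0 - K
    return abs(x - p_true)

fused_err = run(use_vision=True)
imu_only_err = run(use_vision=False)
print(f"IMU only: {imu_only_err:.3f} m, IMU + vision: {fused_err:.4f} m")
```

With vision disabled, the estimate wanders off by about 0.2 m over ten seconds (bias times elapsed time). With visual corrections, the error stays at the millimetre level, while poses remain available at the full IMU rate.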

On Hardware and Calibration

An EKF-based VIO pipeline is a well-understood approach to pose estimation but decidedly non-trivial to tune. Most software in this domain is at the mercy of hardware. ARKit and Tango both use monocular VIO, where robust scale estimation is a notoriously tricky problem: a single camera cannot distinguish a small, nearby scene from a large, distant one. Scale drift compounding from a bad initial estimate makes for a thoroughly unimpressive experience, and this is an area particularly affected by hardware quality. Monocular implementations must estimate metric scale through a combination of physical camera movement (multi-view triangulation) and accelerometer data. At the expense of more hardware, HoloLens and Windows Mixed Reality headsets incorporate stereo camera pairs, so visual features can be triangulated instantaneously. Notably, all three platforms share an underlying trait: the hardware is directly manufactured or tightly specified by the platform owner.
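Two small formulas capture the contrast. For a rectified stereo pair, depth follows directly from disparity as Z = f·B/d. A single pinhole camera, by contrast, produces identical image coordinates for a point and for the same point scaled away from the camera. The focal length, baseline, and points below are hypothetical values.

```python
def project(point, f=500.0, cx=320.0, cy=240.0):
    """Ideal pinhole projection of a camera-frame point (X, Y, Z) to pixels."""
    X, Y, Z = point
    return (f * X / Z + cx, f * Y / Z + cy)

def stereo_depth(f_px, baseline_m, disparity_px):
    """Depth of a feature seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("feature must have positive disparity")
    return f_px * baseline_m / disparity_px

# Monocular ambiguity: a point twice as far away (and twice as large a scene)
# lands on exactly the same pixel, so metric scale must come from elsewhere.
near = project((1.0, 2.0, 4.0))
far = project((2.0, 4.0, 8.0))

# Stereo: a 10 cm baseline and 25 px of disparity pin the depth down directly.
depth = stereo_depth(f_px=500.0, baseline_m=0.10, disparity_px=25.0)
```

Here `near == far`, while `depth` evaluates to 2.0 m from a single pair of simultaneous images.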

IMU errors accumulated over time can be asynchronously corrected with visual updates, but the CMOS image sensors in mobile devices are not ideal, either. Commodity smartphone camera systems tend to optimize for single-shot image quality. As with displays, these designs maximize pixels per unit of sensor area to fit high-resolution capture within a mechanically thin optical profile. Hardware specifications like resolution, pixel size, spectral sensitivity, quantum efficiency, bit depth, exposure, frame rate, shutter type, clock synchronization, optical field of view, and lens distortion all contribute to the quality of data available to VIO systems. Although ultimately a balancing act between cost and performance, strict control over operational tolerances and hardware components is core to estimation accuracy in Tango and HoloLens.

Another equally important piece of the puzzle is calibration. A cornerstone of input quality, calibration encompasses two tasks. The first is analyzing the spatio-temporal relationships between sensors. The second is discovering and correcting individual sensor characteristics, which (always) deviate from the manufactured spec. In-factory calibration is a lengthy process involving tools like robotic arms and expensive measurement benches.
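For a camera, "individual sensor characteristics" typically include intrinsics such as focal lengths and the principal point, plus lens distortion coefficients. Calibration estimates these values. The sketch below uses a pinhole model with a common two-coefficient polynomial radial distortion term (k1, k2). The parameter values are hypothetical, and production calibration toolchains often use richer models.

```python
from dataclasses import dataclass

@dataclass
class Intrinsics:
    fx: float          # focal length in pixels (x)
    fy: float          # focal length in pixels (y)
    cx: float          # principal point (x)
    cy: float          # principal point (y)
    k1: float = 0.0    # radial distortion coefficients
    k2: float = 0.0

def project(point, K: Intrinsics):
    """Project a camera-frame 3D point to pixel coordinates with radial distortion."""
    X, Y, Z = point
    x, y = X / Z, Y / Z                        # normalized image coordinates
    r2 = x * x + y * y
    scale = 1.0 + K.k1 * r2 + K.k2 * r2 * r2   # radial distortion factor
    return (K.fx * x * scale + K.cx, K.fy * y * scale + K.cy)

ideal = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
real = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0, k1=-0.1)

p = (0.5, 0.0, 1.0)            # a point well off the optical axis
u_ideal, _ = project(p, ideal)
u_real, _ = project(p, real)
```

In this example, ignoring the hypothetical k1 = -0.1 would misplace the point by 6.25 pixels. That is a large error compared with the sub-pixel precision feature trackers typically aim for.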

Having established that a rigorous spec involving calibrated hardware is a necessity for inside-out tracking, how does ARKit fit in? Many previous teardowns have documented Apple's "premium" component choices. More importantly, Apple's engineering culture pays close attention to ordinarily small details such as tight synchronization between the camera and IMU. A point not yet mentioned is that a major source of measurement noise is non-deterministic latency between sensor readout and data availability. While ARKit devices may not undergo a complete calibration procedure, Apple's efforts have likely made runtime system parameters easier to estimate or model (e.g. camera lens intrinsics and the average latency between IMU motion and the corresponding visual update).
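Under simplifying assumptions, the latency between IMU motion and the corresponding visual update can be estimated by aligning two signals that should match: the rotation rate measured by the gyroscope and the rotation rate implied by consecutive camera frames. This sketch assumes both signals have already been resampled to a common rate. It then searches for the integer sample lag that minimizes their squared difference. The synthetic signal and the 5-sample delay are hypothetical.

```python
import math

def rotation_rate(k):
    """Synthetic rotation-rate signal (rad/s) at sample index k."""
    return math.sin(0.3 * k) + 0.5 * math.sin(0.07 * k)

def estimate_lag(gyro, vision, max_lag):
    """Return the lag L (in samples) that minimizes the mean squared difference
    between vision[k] and gyro[k - L], i.e. how late the visual signal arrives."""
    best_lag, best_cost = 0, float("inf")
    for lag in range(max_lag + 1):
        pairs = [(vision[k], gyro[k - lag]) for k in range(max_lag, len(vision))]
        cost = sum((v - g) ** 2 for v, g in pairs) / len(pairs)
        if cost < best_cost:
            best_lag, best_cost = lag, cost
    return best_lag

N, TRUE_DELAY = 400, 5
gyro = [rotation_rate(k) for k in range(N)]
vision = [rotation_rate(k - TRUE_DELAY) for k in range(N)]  # same motion, seen later
lag = estimate_lag(gyro, vision, max_lag=20)
```

Multiplying the lag by the sample period converts it to seconds. At a 200 Hz common rate, for example, 5 samples would correspond to 25 ms.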

Tricky Environments

System design challenges like filtering, latency, hardware data quality, and calibration have been discussed so far, but all pose estimation pipelines face a common enemy: the environment. In the general case, inside-out pipelines can make no assumptions about the spaces, environments, or scenarios they operate in. Rather unfortunately, the world is cluttered with a seemingly infinite variety of geometric features, moving obstacles, and varied lighting conditions.

Visual odometry is predicated on atomic pieces of information, called features, computed from image sensor data. Features fall into several high-level categories, such as edges, corners, and blobs. Noting the oversimplification, features can be viewed as a property of the visual noise in the environment. The poster on a wall? The plant in the corner? Vision engineers love image sequences captured in these environments. Particularly for AR, the industry is becoming familiar with demos and marketing videos that visualize feature points as tiny circles augmented on the video, as if to communicate "look, it's working!" While the math works out that pose estimation requires only four unique tracked points per frame, practical implementations often produce and consume several dozen.
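As a rough illustration of corner detection, the sketch below computes the Harris corner response, R = det(M) - k·trace(M)², from image gradients on a tiny synthetic image. It uses plain Python lists, central-difference gradients, and an unweighted 3×3 window. These are simplifications chosen for readability. Real detectors (Harris, Shi-Tomasi, FAST, and others) are heavily optimized and usually smooth the image first.

```python
def harris_response(img, k=0.04):
    """Harris corner response for each pixel of a 2D list of intensities.
    Border pixels without a full neighborhood are left at 0."""
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0   # horizontal gradient
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0   # vertical gradient
    response = [[0.0] * w for _ in range(h)]
    for y in range(2, h - 2):
        for x in range(2, w - 2):
            sxx = syy = sxy = 0.0
            for dy in (-1, 0, 1):          # accumulate the structure tensor M
                for dx in (-1, 0, 1):      # over a 3x3 window
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            det, trace = sxx * syy - sxy * sxy, sxx + syy
            response[y][x] = det - k * trace * trace
    return response

# Synthetic 20x20 image: a bright square on a dark background.
img = [[1.0 if 5 <= x < 15 and 5 <= y < 15 else 0.0 for x in range(20)] for y in range(20)]
R = harris_response(img)
best = max((R[y][x], y, x) for y in range(20) for x in range(20))
print(f"strongest corner response at (row, col) = ({best[1]}, {best[2]})")
```

Corners score strongly positive because the gradient varies in two directions. Straight edges score negative (one dominant direction), and flat regions score zero. This is one concrete sense in which features are a property of the visual "noise" in the environment.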

In practice, inside-out pipelines must work to minimize the impact of the following:

Feature Presence: Despite a plethora of techniques for finding salient landmark data in an image, what if the user is standing in the middle of a white room with no detectable features? Without additional sensors, a lack of identifiable features is a universal failure mode for all vision-based tracking approaches. In particular, extremely low-light environments are known failure cases for RGB-only VIO systems.

Lighting Conditions: Mixed lighting conditions are among the most egregious threats to tracking reliability. Visual characteristics of an environment change frequently on account of sun position, switchable illumination sources, source occlusion, and shadows. Some recent solutions attack these problems head-on by estimating illumination sources or directly tracking camera parameters like exposure.

Feature Reliability: What happens if an algorithm starts tracking feature points associated with a dynamic object, like a TV screen or a cat? In vision terminology, tracked features that negatively impact pose estimation are called outliers. One popular family of algorithms for handling outliers is RANSAC (random sample consensus). It repeatedly fits a motion model to small random subsets of measurements and keeps the model that the most measurements agree with, discarding the rest. Tight coupling of visual and inertial sensing helps further (e.g. visual features not consistent with IMU readings or the current filter state can be thrown away).
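Here is a minimal RANSAC sketch. It is simplified to the case where the motion between two frames is a pure 2D image translation, so a single feature match is enough to form a hypothesis. The matches, threshold, and iteration count are hypothetical. Twenty features follow the camera motion. Eight features sit on a moving object (say, a TV screen) and agree with each other, but not with the background.

```python
import random

def ransac_translation(matches, threshold=0.5, iterations=50, seed=0):
    """Estimate a 2D translation from (src, dst) point matches with RANSAC.
    Returns (translation, inlier_indices)."""
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        (sx, sy), (dx, dy) = rng.choice(matches)   # minimal sample: one match
        tx, ty = dx - sx, dy - sy                   # translation hypothesis
        inliers = [i for i, ((ax, ay), (bx, by)) in enumerate(matches)
                   if abs(bx - ax - tx) <= threshold and abs(by - ay - ty) <= threshold]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    # Refine using every inlier; the rejected outliers play no further role.
    n = len(best_inliers)
    tx = sum(matches[i][1][0] - matches[i][0][0] for i in best_inliers) / n
    ty = sum(matches[i][1][1] - matches[i][0][1] for i in best_inliers) / n
    return (tx, ty), best_inliers

# Hypothetical data: background features shift by (3, -2) with slight noise...
matches = []
for i in range(20):
    src = (float(i), float((7 * i) % 13))
    matches.append((src, (src[0] + 3.0 + 0.01 * (i % 5 - 2),
                          src[1] - 2.0 + 0.01 * (i % 3 - 1))))
# ...while features on a moving object shift by (10, 7).
for i in range(8):
    src = (30.0 + i, 5.0 + i)
    matches.append((src, (src[0] + 10.0, src[1] + 7.0)))

(tx, ty), inliers = ransac_translation(matches)
print(f"translation ({tx:.2f}, {ty:.2f}) from {len(inliers)} of {len(matches)} matches")
```

The moving object's features are internally consistent. RANSAC still rejects them, because the background motion has more support.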

A newer class of visual odometry, the so-called direct or semi-direct methods, eschews features altogether, although for brevity these methods are not discussed here. Another point entirely glossed over is the process by which 2D cameras can recover 3D pose.

Mapping The Environment

Orthogonal to localization, map-building algorithms make up the second half of SLAM. Visual mapping strategies differ by domain; for instance, autonomous vehicles must assume fully dynamic scenes. Even so, spatial data stored over time provides a base solution to several problems in pose estimation.

Even with the most expensive sensors, no system is fully immune to drift in frame-to-frame visual-inertial odometry. Maps build a database of previously observed landmarks and their relative poses. An active area of research is adjusting and optimizing local poses over time to create a globally consistent map, ideally accurate up to the measurement error of the sensors themselves. Recognizing a return to an area that has already been observed triggers a procedure called loop closure, which corrects the drift accumulated along the way. For more on this topic, the interested reader can look into a family of numerical optimization algorithms called bundle adjustment.
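The effect of loop closure can be sketched with a one-dimensional pose graph. This is a drastically simplified relative of the optimization problems behind bundle adjustment and pose-graph SLAM. In this hypothetical example, every odometry edge overestimates its motion by 0.1 m on a walk that returns to its start. A single loop-closure edge asserting "this is where I began" lets a weighted least-squares solve spread the accumulated error across the whole trajectory.

```python
def solve(A, b):
    """Solve the square linear system A x = b by Gaussian elimination with
    partial pivoting (A and b are modified in place)."""
    n = len(b)
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            for c in range(col, n):
                A[r][c] -= f * A[col][c]
            b[r] -= f * b[col]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (b[r] - sum(A[r][c] * x[c] for c in range(r + 1, n))) / A[r][r]
    return x

def optimize_pose_graph(n_poses, edges, anchor_weight=1.0):
    """Least-squares 1D pose graph. Each edge (i, j, meas, weight) states that
    x[j] - x[i] should equal meas. Pose 0 is anchored at 0 to fix the offset."""
    H = [[0.0] * n_poses for _ in range(n_poses)]
    g = [0.0] * n_poses
    for i, j, meas, w in edges:
        # Normal equations of the cost w * (x[j] - x[i] - meas)^2.
        H[i][i] += w
        H[j][j] += w
        H[i][j] -= w
        H[j][i] -= w
        g[i] -= w * meas
        g[j] += w * meas
    H[0][0] += anchor_weight        # prior: x[0] = 0
    return solve(H, g)

# Hypothetical walk: +1, +1, -1, -1 m (back to the start), but odometry
# overestimates every step by 0.1 m.
odometry = [(0, 1, 1.1, 1.0), (1, 2, 1.1, 1.0), (2, 3, -0.9, 1.0), (3, 4, -0.9, 1.0)]
loop_closure = [(0, 4, 0.0, 100.0)]   # "I am back where I began", trusted highly

drifted = optimize_pose_graph(5, odometry)
corrected = optimize_pose_graph(5, odometry + loop_closure)
print("odometry only:", [round(v, 3) for v in drifted])
print("with closure: ", [round(v, 3) for v in corrected])
```

Without the closure, the final pose sits 0.4 m from the start. With it, the error is redistributed so that every pose, not just the last one, moves closer to the truth.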

"Have I been here before?"

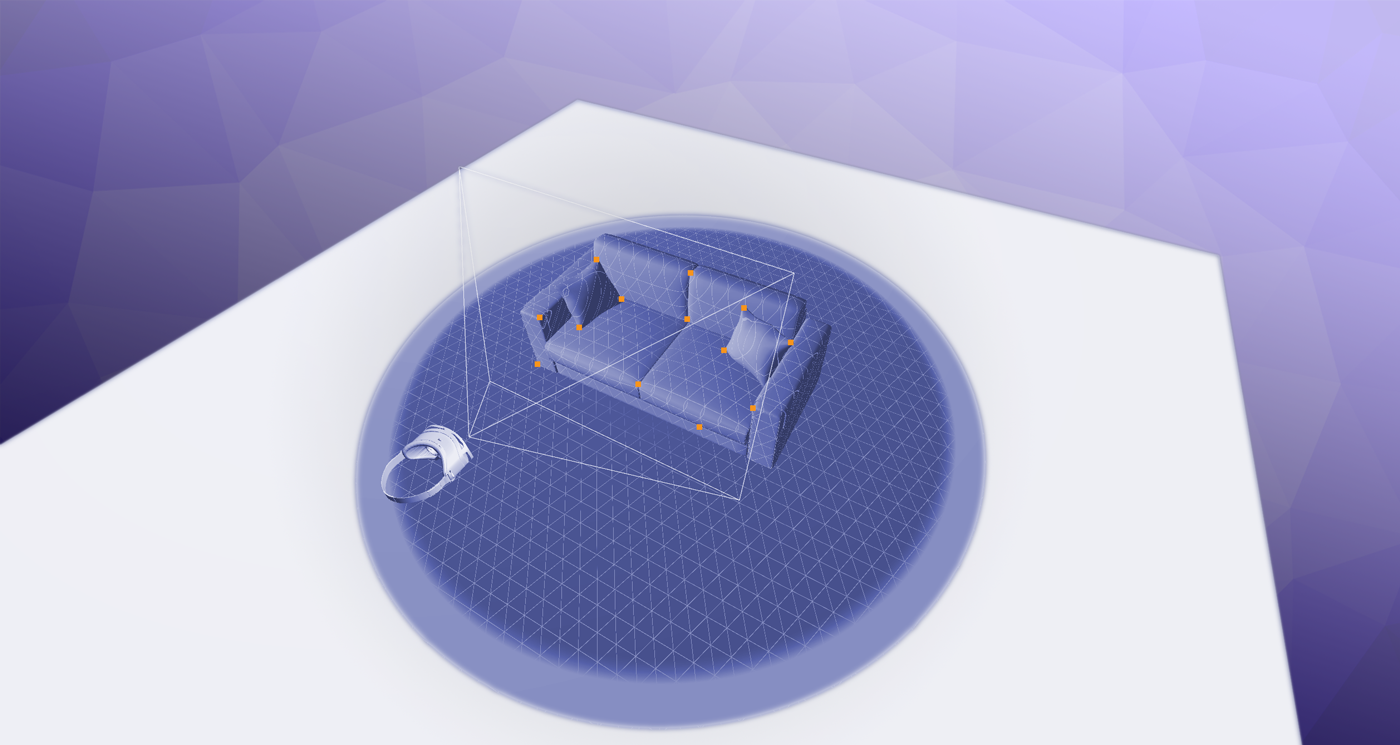

Beyond pose optimization, maps provide frames of reference useful for room-scale and world-scale relocalization. An immediate application is establishing a canonical coordinate system relative to a known starting position (i.e. re-registering content where it was previously placed in the environment). Furthermore, recovery from gross tracking failure might be possible by exhaustively searching the map. Loop-closed relocalizations are commonly observed in ARKit when the device has moved quickly or over long distances. Absent a full 3D reconstruction, feature points in the map can also power capabilities like ground-plane detection.
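Here is a hedged sketch of how sparse map points could support ground-plane detection. It assumes the IMU has already aligned the map's y axis with gravity, so the floor is a horizontal plane and only its height is unknown. The function below is a hypothetical helper, not ARKit's method. Every point votes for a candidate height, and the height with the most support wins.

```python
def detect_floor(points, tolerance=0.02, min_support=10):
    """Find the best-supported horizontal plane y = h among gravity-aligned
    map points (x, y, z). Returns (h, support) or None if too few points agree."""
    best_support = []
    for _, cand_y, _ in points:                       # every point proposes a height
        support = [y for _, y, _ in points if abs(y - cand_y) <= tolerance]
        if len(support) > len(best_support):
            best_support = support
    if len(best_support) < min_support:
        return None
    # Refine the height as the mean of the supporting points.
    return sum(best_support) / len(best_support), len(best_support)

# Hypothetical map points: 30 on a floor 1.5 m below the camera, 10 on clutter.
floor = [(0.1 * i, -1.5 + 0.002 * (i % 5 - 2), 0.2 * (i % 7)) for i in range(30)]
clutter = [(0.3 * i, -0.5 + 0.1 * i, 1.0) for i in range(10)]
result = detect_floor(floor + clutter)
```

The `min_support` threshold makes the detector return nothing, rather than guess, when the map holds too few consistent points.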

A variety of spatial data structures (generally graphs) exist to describe maps, nominally built from periodic keyframe images of the environment. Users familiar with the Tango API would know this feature as the "Area Description File" (ADF). Microsoft has similar APIs built into HoloLens and Windows Mixed Reality for spatial anchors and their serialized formats. Persisting maps across devices for coordinate-system alignment forms the basis of shared multi-device experiences; otherwise, content would be anchored in device-local reference frames.
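The question "have I been here before?" can be sketched in simplified form. Store each keyframe as a bag of visual words (counts of quantized feature descriptors), then relocalize by finding the keyframe most similar to the current frame. The keyframe names, word IDs, and the 0.5 acceptance threshold are hypothetical. Practical systems add refinements such as TF-IDF weighting, geometric verification, and a final pose solve against the matched keyframe.

```python
import math
from collections import Counter

def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words histograms."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def relocalize(query: Counter, keyframes: dict, min_score: float = 0.5):
    """Return (keyframe_id, score) for the best match, or (None, score)
    if nothing in the map is similar enough to trust."""
    best_id, best_score = None, 0.0
    for kf_id, words in keyframes.items():
        score = cosine_similarity(query, words)
        if score > best_score:
            best_id, best_score = kf_id, score
    return (best_id, best_score) if best_score >= min_score else (None, best_score)

# Hypothetical map: visual-word counts for three stored keyframes.
keyframes = {
    "kf_hallway": Counter({1: 3, 2: 2, 3: 1}),
    "kf_kitchen": Counter({7: 4, 8: 2, 9: 3}),
    "kf_desk": Counter({4: 2, 5: 5, 6: 1}),
}
current_frame = Counter({7: 3, 8: 2, 9: 2, 1: 1})
match = relocalize(current_frame, keyframes)
```

A successful match only says which keyframe to try. The device pose would then be recovered by matching individual features against that keyframe.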

Relocalization techniques are still actively studied and are subject to all the same visual limitations as ad-hoc visual-inertial odometry. For instance, a map built in the afternoon might have dramatically different lighting characteristics than one captured in the morning. Lastly, world-scale relocalization is useful for context-aware experiences, where systems might distinguish between home and office modes. A full topic unto itself but worth a brief mention, outdoor relocalization is usually cloud- and GPS-assisted and a prime candidate for recent deep-learning techniques (world-scale maps might be a little too big for device memory!).

Conclusion & Outlook

Consumer-ready inside-out tracking is a challenging domain, complicated by sub-optimal commodity hardware and unconstrained environments. In the short term, monocular VIO systems are unlikely to compete directly in head-worn systems, where the complexity of the problem dictates careful hardware engineering. On the flip side, with dedicated inside-out hardware on the horizon, low-cost proxy systems give an impressively large technical audience an experimental sandbox for the upcoming generation of untethered AR/VR systems.

So what's next for developers? Beyond 6DoF, handheld devices and tethered VR headsets provide us with the first level of spatial understanding. Whether AR or VR, the industry has been armed with accessible technology to think "beyond the game level," where experiences can be crafted with procedural content derived from the environment. Next-gen applications will undoubtedly employ a wide array of new techniques for human and environmental perception, including semantic understanding, multi-modal input, and medium-native content authoring tools.

For further reading, Michael Abrash has a worthy post on open challenges in the broad AR/VR space; Matt Miesnieks recently published a similar post containing more historical context; and lastly, Tomasz Malisiewicz compiled a comprehensive overview in 2016 on state-of-the-art pose estimation algorithms.
