Spatial computing and immersive media reach far beyond games, including content creation, cinematic storytelling, visualization, and simulation. Game engines like Unity, Unreal, and Godot are flexible enough to support these experiences, but where an engine provides limited built-in facilities, many AR/VR app developers have taken to codifying design patterns and feature implementations as plugins and samples. I'm thinking of projects like TurboButton, Virtual Reality Toolkit (VRTK), Unity's EditorXR, and Google's Daydream Elements.

Thanks to a large number of open-source libraries and middleware, building a base engine layer is not as difficult as it once was. Because the field is relatively new, spatial computing platforms are built on modern hardware, which limits their need to support legacy graphics APIs and related engine baggage. Armed with emerging clarity over the OpenXR device & application abstraction API, I saw an opportunity for a new project catering specifically to spatial computing developers.

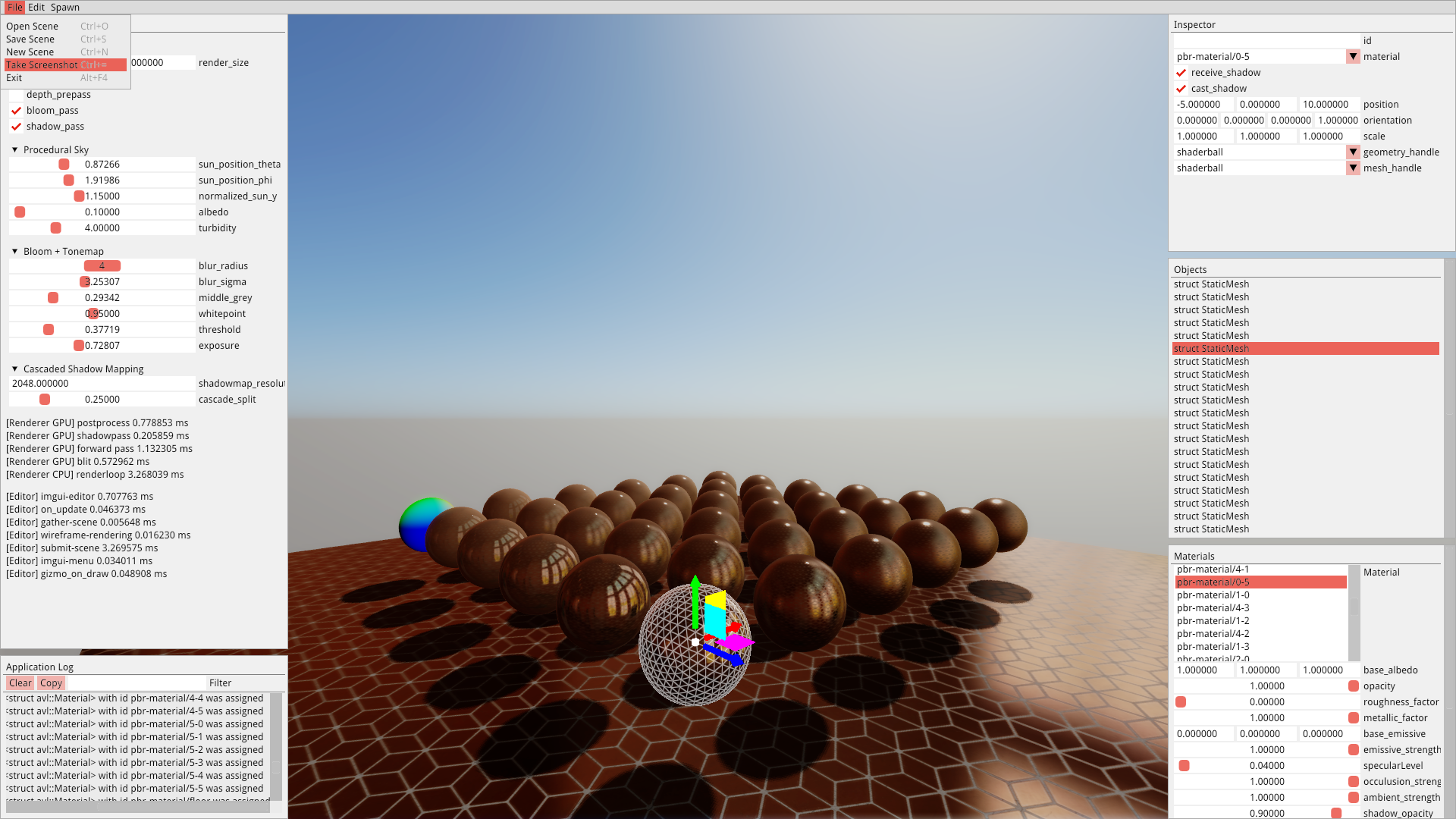

Polymer is the humble start of a desktop-class immersive computing engine with built-in abstractions for AR/VR design and prototyping. The audience for Polymer is tiny and reflects my personal development toolchain: native C++ targeting desktop-class virtual reality headsets on Windows.

Polymer intends to supplement the tools available to experience developers, not to replace well-supported, ship-your-app engines. A core design principle of Polymer is to provide an architecture that invites code-level experimentation and hacking in ways that might be tedious in larger codebases. Examples might include interfacing directly with novel hardware and exploring new input methods or rendering backends.

Polymer is neither an effort to build polished experiences nor a generic game development environment with scripting, an artist-friendly asset import pipeline, and shader authoring. Instead, it provides abstractions useful to AR/VR experience developers, such as locomotion/teleportation, reticles, controllers & haptics, and physics-aware interactive objects.

Polymer is an early-stage project. Prior development focused on creating a tiny core runtime capable of supporting complex ideas. With consumer 6DoF standalone devices on the horizon, Polymer's supported platforms will soon include mobile hardware. Excitement around future devices was a motivating factor in open-sourcing Polymer in its present form. As the project evolves to include a reusable hand/controller/interaction model, now seemed like an ideal moment to shift focus from core development towards building a critical mass of samples and examples, and to invite community experimentation beyond my personal prototyping activities.

Postscript A: Polymer consists of ideas and code from a wide variety of sources. Notable influence has been taken from Google's Lullaby, Microsoft's Mixed Reality Toolkit, NVIDIA's Falcor, Unity's EditorXR, VRTK, Morgan McGuire's G3D, Marko Pintera's bs::framework, OpenVR Unity SDK, and Vladimír Vondruš' Magnum.

Postscript B: It was a calculated risk to share the name with Google's Polymer web component library, since the domains are contextually unrelated.
