The most profound technologies are those that disappear. They weave themselves into the fabric of everyday life until they are indistinguishable from it — Mark Weiser

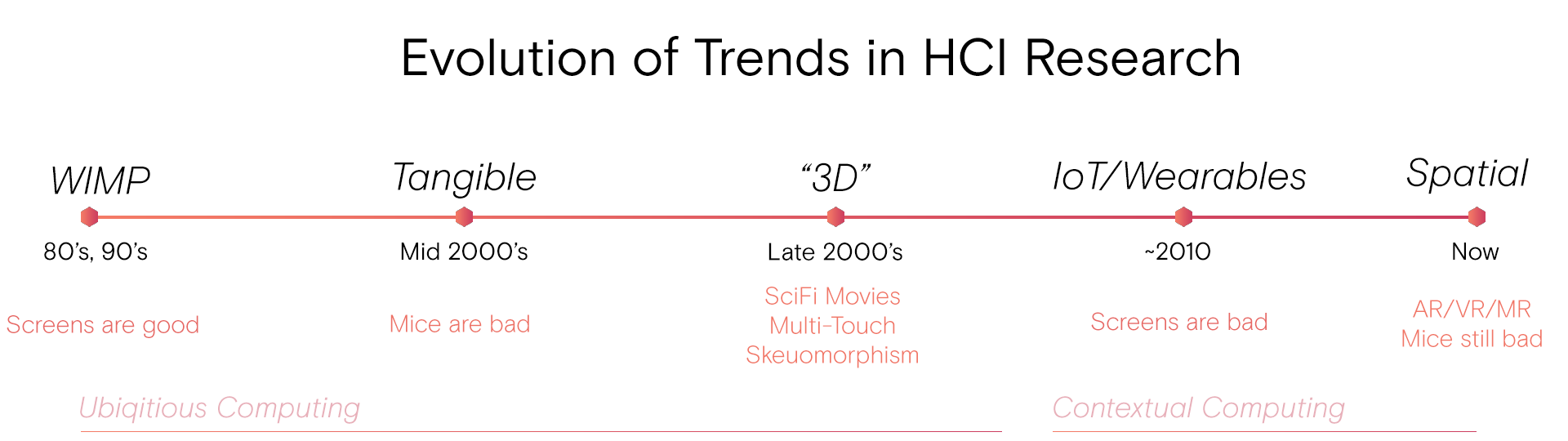

The tree of human-computer interaction continues to grow. Looking back, we might observe historic branches with examples like the mouse and keyboard, touchscreens, and 6DoF gaming controllers. Recent trends in AR/VR look through the lens of human understanding with eye, hand, and body tracking. Spatial computing draws on the sum of decades of exploration in HCI along with adjacent fields including psychology, sociology, neuroscience, and machine learning.

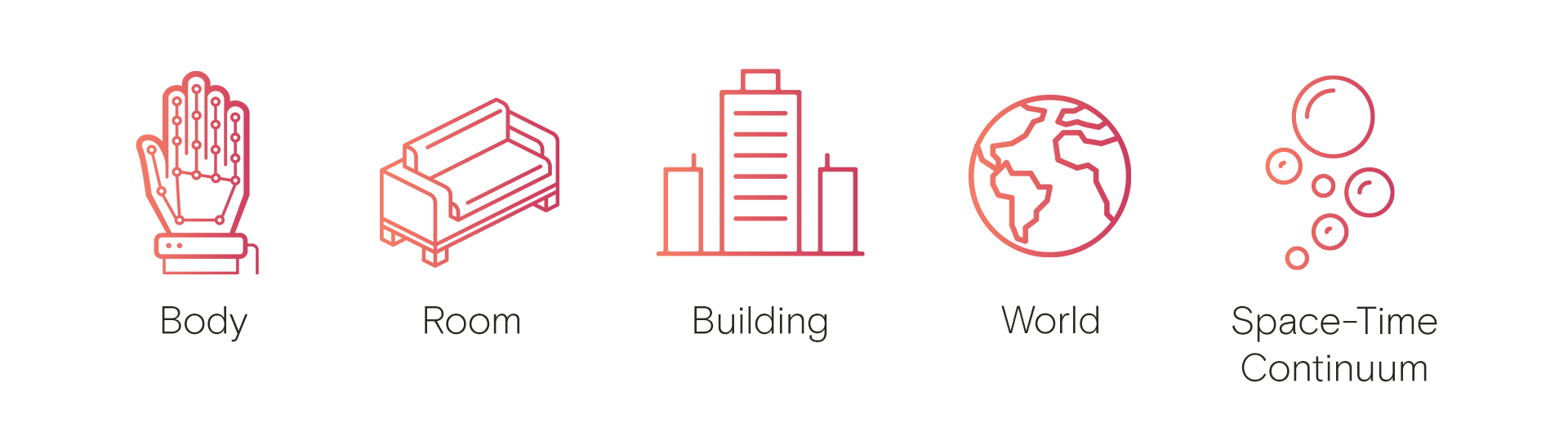

Spatial computing transcends the tactical execution of technology powering AR/VR/MR devices. Looking beyond this, the design and user experience diverge quickly depending on product capabilities and intent: for example, is the product optical see-through or not? Or, should it be worn in public? What gels spatial computing across new product categories is an intent to change how we compute, increasing the utility of computation with specific technologies and design systems that mitigate the vast challenges of when and where.

Take two ends of the spectrum. All-day wearable augmented reality requires specific designs to support diverse environments and situations. Cherry-picking one example, imagine future social scenarios concerning our attention. Do we want to compute while engaging in conversation? (Ever talked to someone wearing AirPods? The social friction is immense.) On the other end, gaming or enterprise virtual reality platforms feel most at home in a smaller space, separated from the physical world and commanding the wearer's full attention. Note how both product categories are deeply entangled in body-scale input and the related network of questions around speed, precision, and fatigue.

With respect to input, an AR/VR product might look at one of these scales with a sampling of questions to surface challenges such as:

• social acceptability & input modality;

• ergonomics including length & effort of interaction;

• and latency & fidelity of the feedback loop.

If these bullet points feel familiar, well, it's because they describe some of the fundamental concerns of human-computer interaction. The point is that many of the elemental challenges of spatial computing may be understood through well-described HCI research. But there are new considerations as well.

Many in the augmented reality community are in love with the notion of superpowers. An emerging theory is that pervasive environment understanding coupled with a tight and effortless feedback loop will enable new modes of computation that we haven't yet observed at scale. Another superpower re-examines the impact of embodied cognition on human learning. Research in embodied cognition conjectures that VR might accelerate thinking and creativity by increasing the surface area of possible brain/body experiences.

Hypotheses such as these, backed by classically studied interaction problems, cement spatial computing as the latest branch of HCI. Paradigm shifts and their impact may only be measured in retrospect, but the move from pictures under glass to body-centric computation situated in diverse environments will hopefully be clear looking back ten years from now. The reader is challenged to reflect on how spatial computing is not a revolutionary movement to re-think computing with new devices; it is a steady march towards frictionless, context-aware computation backed by the rich history of HCI.

The quote at the top is pulled from Mark Weiser's original thoughts on Ubiquitous Computing, a vision that shares remarkable similarities with spatial computing. Taking stock of the current generation of AR/VR products, a computer on your face is the opposite of an invisible technology. While waiting for Moore's Law to catch up alongside new breakthroughs that push our understanding of physics, let's peek at other major innovations that spatial computing must address.

Ingredients for Evolution

Inspired by Shneiderman et al.'s Grand Challenges for HCI, the following list distills a few of the important challenges remaining in popularizing spatial computing products over the next decade.

(1) Friction to engage

A well-designed spatial computing environment will enable humans to express thoughts easily, but platforms need to reduce the friction of transitioning in & out of AR/VR cognitively, ergonomically, and socially (i.e. the AirPods problem). Furthermore, spatial computing provides an opportunity to fundamentally reconsider the accessibility of computational systems for those with sensory or physical disabilities (for more, please see the Inclusive Design Kit from Kat Holmes).

(2) Input at the speed of thought

Backed by contextual awareness of the environment and personalized knowledge management graphs, how can we implement efficient multi-modal input systems that encourage us to treat computers as collaborators rather than machines? Will these devices ever be less than machines? Traditional screen-based metaphors and interactions may be helpful to establish familiarity, but should ultimately take less precedence as we discover new computational behaviors that transcend the (primarily) screen-based input paradigms in use today.

(3) Seamless across devices, environments, and contexts

This notion is what Timoni West calls "a really good internet of things" [6]. Spatial computing devices contend with myriad cloud services and devices speaking different protocols, both open and closed (what some may call the AR Cloud). These layers should exist invisibly between platform and user. Furthermore, a seamless experience needs to be contextually adaptive across social situations where attention might be fractured between the device and the world.

(4) Frameworks for trust and privacy

AR/VR/MR platforms operate on personal data captured or derived from users and their environment. Platform owners must be pressed to provide clear and accountable systems for auditing collected information, and provide strong safeguards to protect the security of data, both locally and remotely. For more, read The Eyes Are the Prize by Avi Bar-Zeev, The Mirrorworld Bill of Rights by Paul Hoover, and Jessica Outlaw's work on XR Privacy.

(5) Real world valence

Spatial computing systems coexist with reality, where tools, objects, and surfaces have semantics and purpose. Spatial computing should recognize that these elements have intrinsic meaning and utility (this concept is based on the original spatial computing ideas outlined by Simon Greenwold in his 2003 MIT thesis).

(6) Tools made for the medium

Developer experience should be a chief consideration to reduce research and development time. Spatial computing demands novel tools designed from the ground up that capture essential patterns. The first generation of tools offers a solid foundation, with software like Unity's EditorXR and Project MARS, Torch3D, Apple's RealityKit, SketchBox, Microsoft's Maquette, Tvori, and others.

Pervasive adoption of AR/VR/MR platforms is contingent on solving these problems deeply. Spatial computing gives us a practical and theoretical foundation to solve critical interaction issues by chiefly considering the human side of the equation.

An ideal path forward involves identifying more human-scale problems, at both micro and macro levels, rather than immediately looking to technology for solutions. With enough forward momentum in spatial computing, AR/VR/MR technologies might conceivably disappear into the background, bringing us closer to Weiser's vision of invisible computation and what Ken Perlin sees as the magical future of being human.

For more in-depth study of spatial computing principles, check out this index of talks, research papers, and articles.
